With quasi-stable dynamic reservoirs, the effect of any given input can persist for a very long time. Reservoir-Type RNNs Are Insufficient for A Few Reasons This sub-field of computer science is called reservoir computing, and it even works (to some degree) using a bucket of water as a dynamic reservoir performing complex computations. Learning is limited to that last linear layer, and in this way, it's possible to get reasonably OK performance on many tasks while avoiding dealing with the vanishing gradient problem by ignoring it completely. Recurrent feedback and parameter initialization is chosen such that the system is very nearly unstable, and a simple linear layer is added to the output. For RNNs, one early solution was to skip training the recurrent layers altogether, instead of initializing them in such a way that they perform a chaotic non-linear transformation of the input data into higher dimensional representations. The Development of New Activation Functions in Deep Learning Neural Networksįor deep learning with feed-forward neural networks, the challenge of vanishing gradients led to the popularity of new activation functions (like ReLUs) and new architectures (like ResNet and DenseNet). Back-propagating through time has the same problem, fundamentally limiting the ability to learn from relatively long-term dependencies.

#Sequential model lstm series#

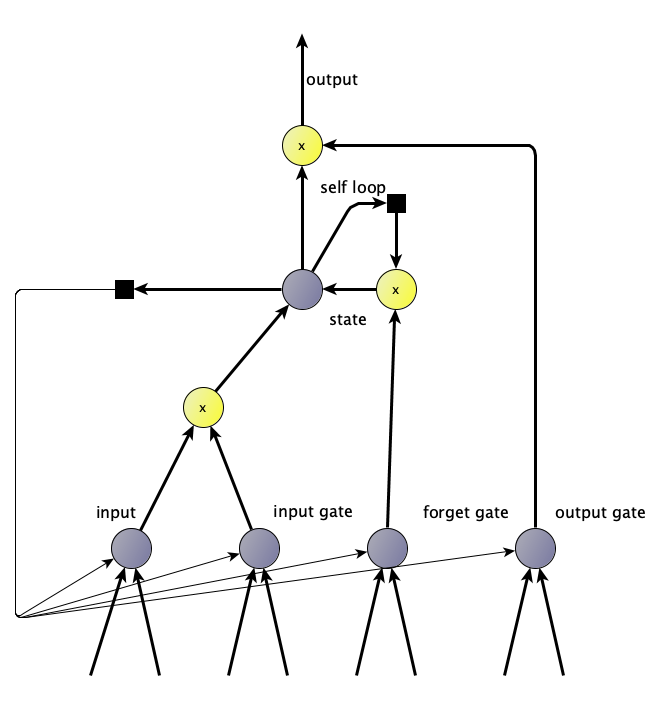

Without going into too much detail, the operation typically entails repeatedly multiplying an error signal by a series of values (the activation function gradients) less than 1.0, attenuating the signal at each layer. Learning by back-propagation through many hidden layers is prone to the vanishing gradient problem. The challenge is that this short-term memory is fundamentally limited in the same way that training very deep networks is difficult, making the memory of vanilla RNNs very short indeed. This arrangement can be simply attained by introducing weighted connections between one or more hidden states of the network and the same hidden states from the last time point, providing some short term memory. Nautilus with decision tree illustration.Ī standard RNN is essentially a feed-forward neural network unrolled in time. For this, machine learning researchers have long turned to the recurrent neural network or RNN. When learning from sequence data, short term memory becomes useful for processing a series of related data with ordered context. Other examples of sequence data include video, music, DNA sequences, and many others. There are many instances where data naturally form sequences and in those cases, order and content are equally important. a set of images that map to one class per image (cat, dog, hotdog, etc.). This is the essence of supervised deep learning on data with a clear one to one matching, e.g.

After learning from a training set of annotated examples, a neural network is more likely to make the right decision when shown additional examples that are similar but previously unseen. In neural networks, performance improvement with experience is encoded as a very long term memory in the model parameters, the weights. One definition of machine learning lays out the importance of improving with experience explicitly:Ī computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E. Using past experience for improved future performance is a cornerstone of deep learning, and indeed of machine learning in general. The Primordial Soup of Vanilla RNNs and Reservoir Computing
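
The reservoir computing recipe described in this section (fixed random recurrent weights scaled to sit just short of instability, with learning confined to a final linear layer) can be sketched in a few lines of NumPy. This is a minimal echo-state-style sketch under stated assumptions, not a reference implementation: the function names (`make_reservoir`, `run_reservoir`, `fit_readout`), the reservoir size, the spectral radius of 0.95, the washout length, the ridge-regression readout, and the sine-wave toy task are all illustrative choices that do not come from the article.

```python
import numpy as np


def make_reservoir(n_inputs, n_units, spectral_radius=0.95, seed=0):
    """Build fixed random input and recurrent weights (these are never trained)."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=(n_units, n_inputs))
    w_rec = rng.uniform(-0.5, 0.5, size=(n_units, n_units))
    # Rescale so the largest eigenvalue magnitude sits just below 1.0,
    # keeping the dynamics close to, but short of, instability.
    w_rec *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w_rec)))
    return w_in, w_rec


def run_reservoir(inputs, w_in, w_rec):
    """Drive the reservoir with a (T, n_inputs) sequence; return (T, n_units) states."""
    state = np.zeros(w_rec.shape[0])
    states = np.empty((len(inputs), w_rec.shape[0]))
    for t, u in enumerate(inputs):
        # Non-linear recurrent update; no gradients flow through this step.
        state = np.tanh(w_in @ u + w_rec @ state)
        states[t] = state
    return states


def fit_readout(states, targets, ridge=1e-6):
    """Train only the linear output layer, here by ridge regression (an assumption)."""
    gram = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(gram, states.T @ targets)


# Hypothetical toy task: predict the next sample of a sine wave.
signal = np.sin(np.linspace(0, 20 * np.pi, 1000))
inputs, targets = signal[:-1, None], signal[1:, None]

w_in, w_rec = make_reservoir(n_inputs=1, n_units=100)
states = run_reservoir(inputs, w_in, w_rec)

washout = 100  # discard early states that still depend on the zero initial state
w_out = fit_readout(states[washout:], targets[washout:])
predictions = states[washout:] @ w_out
mse = float(np.mean((predictions - targets[washout:]) ** 2))
print(f"readout MSE: {mse:.2e}")
```

Because the recurrent weights stay fixed, fitting reduces to a single linear solve and back-propagation through time is never needed, which is how this approach sidesteps the vanishing gradient problem rather than solving it.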
